Email remains the most widely used form of business communication—and consequently, a prime target for cybercriminals. Originally designed without security or privacy in mind, email has long been vulnerable, prompting the rise of security solutions to protect this vital channel. Early defenses relied heavily on signature and reputation-based detection, evolving over time into sandboxing and dynamic analysis, which initially proved effective.

However, cybercriminals have advanced faster than these defenses. In recent years, attackers have shifted from delivering “trusted” content designed to fool security systems to leveraging sophisticated identity deception aimed at tricking individuals into trusting malicious emails. Traditional email security focuses on analyzing message content and sender reputation, but cybercriminals have adapted by using impersonation tactics to manipulate recipients directly, bypassing many existing protections.

A New Day, A New Email Threat

Modern email attacks increasingly rely on identity deception to bypass traditional defenses like Secure Email Gateways (SEGs). At the core of these attacks is impersonation, where threat actors send messages that appear to come from a trusted individual, organization, or brand — convincing recipients to take harmful actions such as initiating wire transfers or sharing sensitive information.

Attackers often use evasive tactics, including sending emails from reputable services like Gmail or Outlook, employing convincing pretexts to build trust, and deploying sandbox-aware malware or sometimes no malicious payload at all. These evolving techniques are growing rapidly, amplifying the risk of significant financial loss and reputational damage, often impacting C-level executives or even board members.

The most common form of identity deception is the Display Name Attack. Because popular email services like Gmail, Yahoo!, and Outlook allow users to freely set the display name in the "FROM" field, attackers can easily insert the name of a trusted figure, such as a CEO or a familiar brand like a bank, making this attack simple and cost-effective.
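As a rough illustration of how a display name check could work, the sketch below parses the "From" header with Python's standard `email.utils.parseaddr` and flags messages where a protected name is paired with an unexpected sending domain. The `PROTECTED_SENDERS` table, the `sashimibank.com` domain, and the function name are assumptions made for this example, not part of any product.

```python
from email.utils import parseaddr

# Hypothetical directory: protected display names mapped to the domains
# they legitimately send from. All entries are illustrative assumptions.
PROTECTED_SENDERS = {
    "kate bolseth": {"sashimibank.com"},
}


def is_display_name_mismatch(from_header: str) -> bool:
    """Return True when a protected display name uses an unexpected domain."""
    display_name, address = parseaddr(from_header)
    # Normalize case and whitespace so "Kate  Bolseth" still matches.
    normalized = " ".join(display_name.lower().split())
    allowed_domains = PROTECTED_SENDERS.get(normalized)
    if allowed_domains is None:
        return False  # The display name is not one we protect.
    domain = address.rpartition("@")[2].lower()
    return domain not in allowed_domains
```

A check like this only covers exact name matches; a real system would also need to handle nicknames, reordered names, and deliberate misspellings.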

More sophisticated deception includes domain spoofing, where attackers mimic the recipient’s own domain using lookalike domains, and Account Takeover (ATO) attacks, in which a legitimate email account is compromised and used to launch highly targeted and convincing phishing campaigns.
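One simple way to surface lookalike domains is to measure the edit distance between a sending domain and a list of well-known domains. The sketch below uses a plain Levenshtein distance; the `KNOWN_DOMAINS` list and the `max_distance` threshold are illustrative assumptions, and this is not a description of how any particular product detects lookalikes.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance computed row by row with dynamic programming."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(
                previous[j] + 1,         # delete char_a
                current[j - 1] + 1,      # insert char_b
                previous[j - 1] + cost,  # substitute (or keep) the character
            ))
        previous = current
    return previous[-1]


# Illustrative list of domains worth protecting (an assumption).
KNOWN_DOMAINS = ["gmail.com", "outlook.com", "yahoo.com"]


def closest_lookalike(domain: str, max_distance: int = 2):
    """Return the known domain this one imitates, or None if there is none."""
    domain = domain.lower()
    if domain in KNOWN_DOMAINS:
        return None  # An exact match is the real domain, not a lookalike.
    for known in KNOWN_DOMAINS:
        if edit_distance(domain, known) <= max_distance:
            return known
    return None
```

Edit distance alone will produce false positives for short legitimate domains, so in practice it would be one signal among several rather than a verdict on its own.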

How Does Machine Learning Strengthen Cybersecurity Defenses?

To understand how data science works behind the scenes, it’s helpful to break down the specific parts of an email that are analyzed and how machine learning models are applied. This includes various components embedded within the email header, such as:

- “From” field, or Sender Display Name

- “To” field, or Recipient Display Name

- Subject Line

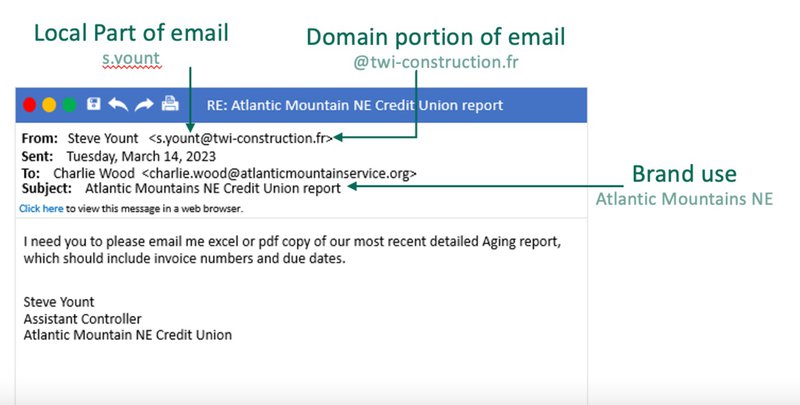

- Local Part, or Email Prefix (see image below)

- Email Sending Domain of Webmail Address (see image below)

In addition, the models scan any contextual data like text and metadata. These include:

- The sender’s infrastructure

- The number of days the IP address has been used to send on behalf of the domain

- The number of emails sent using the same Local Part

- The number of emails using the same Display Name

- Intent of the email (examining the nature of the content, such as the Subject Line)

- Matching Address Group and Display Name

- Character set used in the Local Part of the email (Latin vs. Cyrillic)

- SPF/DKIM/DMARC records

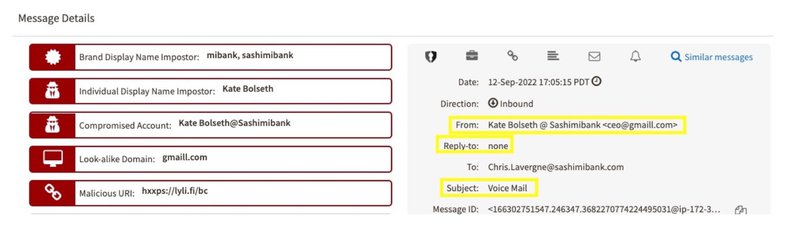

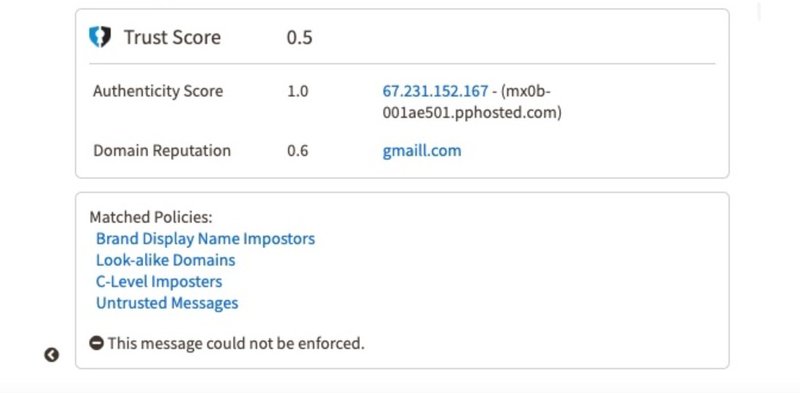
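To make a few of the signals above concrete, the following sketch extracts some of them from raw header values using only the Python standard library. It splits the "From" header into display name, Local Part, and domain; checks whether the Local Part mixes scripts such as Latin and Cyrillic; and reads SPF, DKIM, and DMARC verdicts from an `Authentication-Results` header. The function names and the shape of the returned dictionary are assumptions for illustration, and the regular expression is a simplification rather than a full parser for that header.

```python
import re
import unicodedata
from email.utils import parseaddr


def scripts_in(text):
    """Return script prefixes (e.g. LATIN, CYRILLIC) of the letters in text."""
    scripts = set()
    for ch in text:
        if ch.isalpha():
            name = unicodedata.name(ch, "")
            if name:
                # Unicode names start with the script, e.g. "CYRILLIC SMALL LETTER ES".
                scripts.add(name.split(" ")[0])
    return scripts


def auth_results(header_value):
    """Pull spf/dkim/dmarc verdicts out of an Authentication-Results value."""
    pattern = re.compile(r"\b(spf|dkim|dmarc)=([a-z]+)", re.IGNORECASE)
    return {m.group(1).lower(): m.group(2).lower()
            for m in pattern.finditer(header_value)}


def header_features(from_header, auth_header):
    """Build a small feature dictionary from two header values."""
    display_name, address = parseaddr(from_header)
    local_part, _, domain = address.rpartition("@")
    verdicts = auth_results(auth_header)
    return {
        "display_name": display_name,
        "local_part": local_part,
        "domain": domain.lower(),
        "mixed_scripts": len(scripts_in(local_part)) > 1,
        "spf": verdicts.get("spf", "none"),
        "dkim": verdicts.get("dkim", "none"),
        "dmarc": verdicts.get("dmarc", "none"),
    }
```

Counts such as the number of emails sharing a Local Part or Display Name would come from historical traffic data, which is outside the scope of this sketch.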

Once all of this data is evaluated and scored, Fortra DMARC Protection produces Reputation and Authenticity scores. These scores help determine whether the email was part of a previously unidentified targeted attack or was simply an anomaly. For example, if an email received a very low Authenticity score but a high Reputation score, the models would suggest a likely spoof of a highly reputed domain. The models then output a “final” score, based on the combination of the two model scores, in the customer’s UI dashboard.

In the example of Kate Bolseth@SashimiBank, the threat actor used a lookalike domain for Gmail (gmaill.com) with a generic Local Part/prefix (ceo), which would generally be missed by the naked eye. However, Fortra's machine learning models caught it via the identified policies and gave it an overall low trust score of 0.5, based on the combination of a low Authenticity score and the poor domain reputation of gmaill.com.
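The reasoning about the two scores can be sketched as a small rule table. Everything in this example is an assumption made for illustration: the 0.0 to 1.0 scale, the `low` and `high` thresholds, the label wording, and the use of `min` as a conservative way to combine the scores. The article does not describe how the product actually computes its final score.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelScores:
    # Both scales are assumed: 0.0 (least trusted) to 1.0 (most trusted).
    authenticity: float
    reputation: float


def interpret(scores: ModelScores, low: float = 0.3, high: float = 0.7) -> str:
    """Map a pair of scores to a human-readable interpretation."""
    if scores.authenticity <= low and scores.reputation >= high:
        # Sender does not look authentic, but the domain is well regarded.
        return "possible spoof of a reputable domain"
    if scores.authenticity <= low and scores.reputation <= low:
        return "unauthentic sender on a poorly reputed domain"
    if scores.authenticity >= high and scores.reputation >= high:
        return "likely trustworthy"
    return "needs further review"


def combined_trust(scores: ModelScores) -> float:
    """Hypothetical combination: trust is capped by the weaker signal."""
    return min(scores.authenticity, scores.reputation)
```

Taking the minimum means a message is never trusted more than its weakest signal allows, which is one cautious design choice among many possible ones.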

Every machine learning paradigm has strengths and weaknesses, but the field continues to advance and create opportunities to perform tasks more accurately and efficiently. For these reasons, it is critical to understand the task objective and select the machine learning paradigm best suited to it. This is especially true for applications of machine learning that affect business processes, where they could potentially bottleneck the flow of inbound and outbound communications. This is why Agari Phishing Defense leverages a pre-train and fine-tune paradigm, adapting pragmatic pre-trained models to various downstream tasks, like:

- Analyzing specific text-based email components, such as Subject Lines and body content

- Task-specific functions, such as determining message type or level of suspiciousness

- Identifying groupings or clusters in email data

- Creating data lakes with labeled data that can be used by other models

- Ingesting feeds of useful data, such as lists of suspicious domains and IP address groups

AI is no longer the future of email security; it is the present. Traditional email defenses struggle to detect impersonation and social engineering, allowing BEC and credential theft attacks to reach user inboxes at an alarming rate. Stopping these threats at enterprise scale demands the use of data science to determine whether messages should be trusted. This is where Fortra's Cloud Email Protection is at the forefront, pairing state-of-the-art data science with cutting-edge research on emerging email threats, both known and unknown. We know how modern email threats work inside and out, and we use that insight to design robust models that can detect these threats in the most challenging enterprise environments.